In the fast-paced world of financial markets, speed, accuracy, and data-driven strategies have become critical for success. This is where algorithmic trading comes into play—a technique that uses computer programs to execute trades based on predefined criteria. By eliminating emotional decision-making and increasing execution efficiency, algorithmic trading has transformed how institutions and individual traders approach the market.

As financial data grows more complex and abundant, the need for smarter, adaptive trading strategies has never been greater. Enter FinRL, an open-source framework designed to bring deep reinforcement learning (DRL) into the realm of quantitative finance. Developed by AI4Finance, FinRL bridges the gap between cutting-edge AI research and real-world trading applications, making it easier for developers, researchers, and quants to build and test intelligent trading agents.

But what makes DRL such a game-changer in the financial world? Unlike supervised machine learning models that fit a fixed dataset of labeled examples, a DRL agent learns by interacting with an environment: it takes actions, observes the consequences, and adjusts its policy to maximize cumulative reward. In trading, this means an agent can learn strategies that respond to changing market conditions and can be retrained as those conditions shift. When paired with FinRL’s user-friendly tools and libraries, DRL makes it practical to build automated trading systems designed for today’s volatile markets.

With FinRL leading the charge, deep reinforcement learning is no longer just a theoretical concept. It’s becoming a practical tool for creating smarter, more profitable trading systems—and it’s reshaping the future of finance in the process.

FinRL is an open-source library that brings deep reinforcement learning (DRL) to the world of quantitative finance. Launched in 2020 by the AI4Finance Foundation, FinRL was born out of academic research aimed at exploring how intelligent agents can learn and adapt to financial markets. What started as a research-driven initiative quickly gained traction among data scientists, traders, and fintech developers seeking more powerful and flexible tools for building automated trading strategies.

One of the key differentiators of FinRL is its strong academic foundation. The project has been presented at venues such as the NeurIPS Deep Reinforcement Learning Workshop and ACM ICAIF, and it is used by researchers and practitioners in both academia and industry. It integrates recent advances in machine learning and finance, offering users a robust platform to experiment with, simulate, and deploy DRL-based trading models.

FinRL’s primary goal is simple yet ambitious: to make deep reinforcement learning accessible and practical for financial applications. While DRL has shown impressive results in fields like robotics and gaming, applying it to trading involves unique challenges—such as noisy data, non-stationary environments, and the need for real-time decision-making.

FinRL addresses these challenges by providing:

By lowering the barrier to entry, FinRL empowers both beginners and experts to leverage reinforcement learning for building intelligent trading agents. It’s not just a library—it’s a full ecosystem designed to fast-track research, development, and deployment of AI-powered financial strategies.

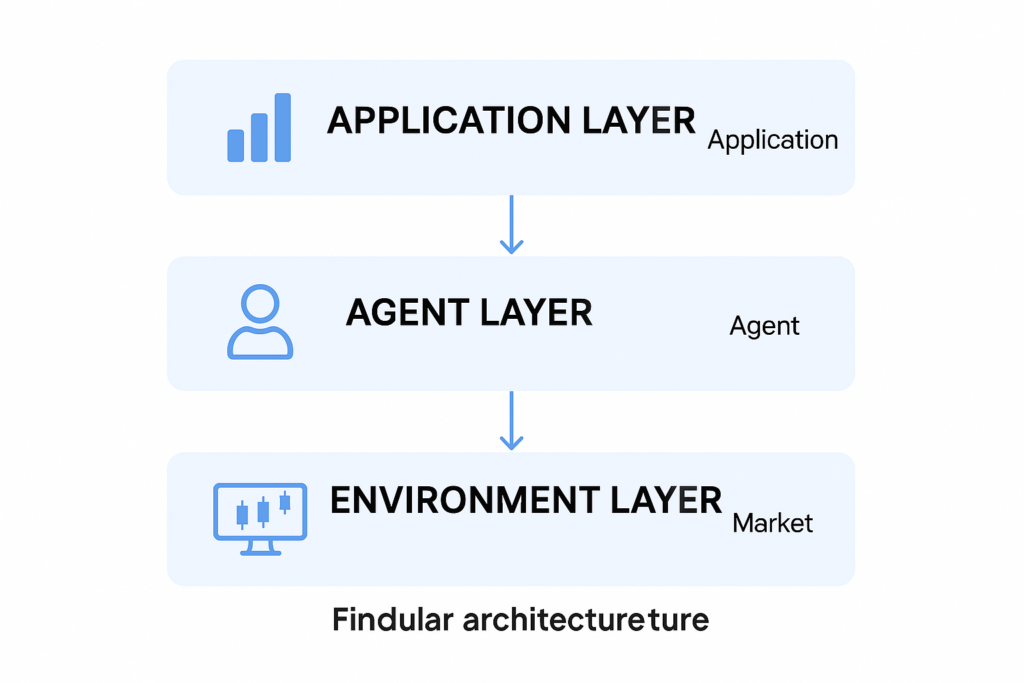

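One of FinRL’s biggest strengths lies in its modular architecture, which is designed to offer flexibility and customization for users at all levels. The framework is divided into three core layers:

- Application layer: the strategy configuration, such as a stock trading or portfolio allocation task.
- Agent layer: the DRL learning algorithms that train the trading policy.
- Environment layer: the market simulation, built from historical data, that the agent interacts with.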

This separation of concerns makes it easier to customize individual components—whether you’re tweaking the market environment, adjusting reward functions, or experimenting with new learning algorithms.
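Here is a rough illustration of what swapping a reward function can look like. This is a plain-Python sketch, not FinRL’s API: `PortfolioTracker`, `pnl_reward`, and `drawdown_penalized_reward` are hypothetical names, and the 0.5 drawdown penalty is an arbitrary assumption. The point is that the reward is injected as a function, so you can change the agent’s objective without touching the rest of the environment.

```python
from typing import Callable, List

# Hypothetical reward signature: takes the history of portfolio values
# and returns a scalar reward for the most recent step.
RewardFn = Callable[[List[float]], float]


def pnl_reward(values: List[float]) -> float:
    """Reward = change in portfolio value over the last step."""
    return values[-1] - values[-2]


def drawdown_penalized_reward(values: List[float], penalty: float = 0.5) -> float:
    """Step PnL minus a penalty proportional to the drawdown from the running peak."""
    drawdown = max(values) - values[-1]
    return (values[-1] - values[-2]) - penalty * drawdown


class PortfolioTracker:
    """Toy environment component: records portfolio values and scores each
    step with whichever reward function it was constructed with."""

    def __init__(self, initial_value: float, reward_fn: RewardFn):
        self.values = [initial_value]
        self.reward_fn = reward_fn

    def record(self, new_value: float) -> float:
        self.values.append(new_value)
        return self.reward_fn(self.values)


# Same price path, two different objectives
path = [100.0, 110.0, 105.0, 108.0]
plain = PortfolioTracker(path[0], pnl_reward)
safe = PortfolioTracker(path[0], drawdown_penalized_reward)
plain_rewards = [plain.record(v) for v in path[1:]]
safe_rewards = [safe.record(v) for v in path[1:]]
```

On the path 100 → 110 → 105 → 108, the plain reward yields 10, -5, 3. The drawdown-penalized version yields 10, -7.5, 2, because it keeps punishing the agent while the portfolio sits below its 110 peak.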

FinRL comes equipped with a suite of pre-implemented deep reinforcement learning algorithms widely used in financial applications. Some of the most popular ones include:

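These implementations build on established libraries such as Stable-Baselines3, ElegantRL, and RLlib. They come with default hyperparameters and plug directly into FinRL’s market environments, so users can focus more on strategy design and less on technical setup, although the defaults usually still need tuning for a given dataset.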

Financial data is notoriously noisy and diverse. To handle this, FinRL provides a robust data preprocessing pipeline that supports multiple asset classes like stocks, ETFs, and cryptocurrencies. Users can pull data from popular sources including:

Once the data is cleaned and structured, users can train their models and use FinRL’s built-in backtesting tools to evaluate performance over historical market conditions. This makes it easier to test hypotheses, compare strategies, and refine results before going live.
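To make “evaluate performance” concrete, the sketch below computes three statistics commonly reported in backtests: cumulative return, annualized Sharpe ratio, and maximum drawdown. It works from a daily series of account values. This is an independent NumPy sketch, not FinRL’s own backtesting code. The function name `backtest_metrics`, the 252 trading days per year, and the zero risk-free rate are assumptions.

```python
import numpy as np


def backtest_metrics(account_values, periods_per_year: int = 252) -> dict:
    """Summary statistics for a daily series of account values."""
    values = np.asarray(account_values, dtype=float)
    daily_returns = values[1:] / values[:-1] - 1.0
    cumulative_return = values[-1] / values[0] - 1.0

    # Annualized Sharpe ratio, assuming a zero risk-free rate
    std = daily_returns.std(ddof=1)
    sharpe = np.sqrt(periods_per_year) * daily_returns.mean() / std if std > 0 else float("nan")

    # Maximum drawdown: the largest percentage fall from a running peak
    running_peak = np.maximum.accumulate(values)
    max_drawdown = ((values - running_peak) / running_peak).min()

    return {
        "cumulative_return": float(cumulative_return),
        "sharpe": float(sharpe),
        "max_drawdown": float(max_drawdown),
    }


metrics = backtest_metrics([100, 102, 101, 105, 98, 104])
```

In this example, the account ends 4% up, but the drop from 105 to 98 is a drawdown of about 6.7%. Comparing strategies on several metrics like these is more informative than looking at returns alone.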

FinRL also stands out with its wide compatibility across trading platforms and APIs. Whether you’re a researcher using Jupyter Notebooks or a developer looking to integrate with a trading platform, FinRL has you covered. It supports:

This ecosystem approach makes FinRL not just a tool, but a bridge between research and real-world deployment.

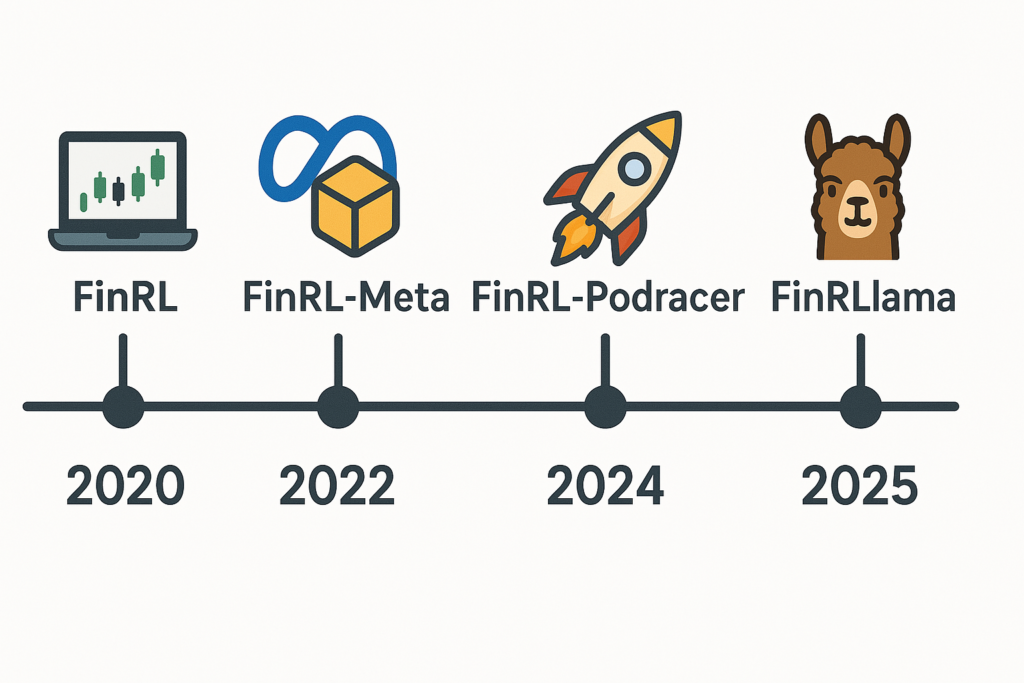

The journey of FinRL began in 2020, when it was first introduced as an open-source framework to make deep reinforcement learning accessible for finance. The initial version focused on providing basic DRL agents and market environments tailored to stock trading. Despite being early-stage, it quickly drew attention from academics, developers, and fintech innovators who were eager to explore AI-driven trading.

In 2021, FinRL took a significant leap forward by introducing a three-layer architecture—application, agent, and environment layers. This modular structure allowed for clearer separation between the strategy configuration, learning algorithm, and market simulation. As a result, users gained more control, better organization, and increased flexibility when building and testing custom trading strategies.

By 2022, the FinRL team had identified the main challenges of applying DRL to real-world financial data: a low signal-to-noise ratio, overfitting during backtesting, and the lack of standard benchmarks. Their answer was FinRL-Meta, a companion library of market environments and benchmarks that provides standardized financial environments, datasets, and evaluation benchmarks. It enabled more reliable and reproducible research, making it easier for users to compare different strategies under realistic conditions.

FinRL-Podracer, first presented at ACM ICAIF 2021, is a high-performance extension built to scale the training and deployment of trading agents. Podracer introduced a CI/CD-style pipeline for DRL, enabling continuous training, hyperparameter optimization, and fast deployment of strategies. This empowered users to go from experimentation to production much more efficiently, which is crucial for firms looking to bring AI-powered strategies to live markets.

The most recent milestone came in 2025 with FinRLlama, a solution developed for the FinRL Contest 2024. FinRLlama fine-tuned a LLaMA-family large language model using reinforcement learning driven by market feedback, so that the model generates trading signals from natural-language market information such as news. It marked a step toward multi-modal intelligence in finance, blending text-based reasoning with adaptive decision-making.

One of the most impactful applications of FinRL is portfolio optimization. DRL agents can learn how to dynamically allocate capital across multiple assets, adjusting positions in response to market trends, risk factors, and return targets. Unlike static allocation rules, a DRL agent maps the current market state to new weights at every rebalancing step and can be retrained as new data arrives, making portfolio management more responsive.
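An agent’s raw output is usually an unbounded vector, so an allocation environment has to turn it into valid portfolio weights. A common choice is a softmax, which gives long-only weights that sum to 1. FinRL’s portfolio-allocation environment normalizes actions in a similar spirit, but the code below is an independent sketch. `actions_to_weights` and `rebalance_step` are hypothetical names, and transaction costs are ignored.

```python
import numpy as np


def actions_to_weights(raw_actions: np.ndarray) -> np.ndarray:
    """Map unbounded policy outputs to long-only weights that sum to 1 (softmax)."""
    shifted = raw_actions - raw_actions.max()  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()


def rebalance_step(portfolio_value: float, raw_actions: np.ndarray,
                   asset_returns: np.ndarray) -> float:
    """Allocate capital by the softmax weights, then apply one period of asset returns.
    Transaction costs are ignored in this sketch."""
    weights = actions_to_weights(raw_actions)
    return portfolio_value * (1.0 + weights @ asset_returns)


# Equal raw actions produce equal weights (1/3 each)
weights = actions_to_weights(np.array([0.0, 0.0, 0.0]))
# With equal weights, a +3% and a -3% asset cancel out over this step
new_value = rebalance_step(1_000.0, np.array([0.0, 0.0, 0.0]), np.array([0.03, 0.0, -0.03]))
# A larger raw action for an asset yields a larger weight
tilted = actions_to_weights(np.array([2.0, 0.0, -1.0]))
```

The softmax guarantees a valid long-only allocation no matter what the policy network outputs. Allowing short positions would require a different mapping.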

Speed and precision are everything in high-frequency trading. By training DRL models to act on short-term market signals, users can prototype agents for intraday strategies, and FinRL-Podracer’s scalable training makes it practical to test many such strategies. Millisecond-level execution in live markets, however, depends on the brokerage connection and execution infrastructure rather than on the learning framework alone.

FinRL also enables smarter risk management and hedging, thanks to its environment-driven learning. Agents can be trained to minimize downside risk, adjust positions during volatility spikes, or maintain market neutrality through dynamic hedging. This is especially valuable for institutions managing large portfolios exposed to complex market conditions.
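One concrete risk mechanism in FinRL is a turbulence index, enabled by the `use_turbulence=True` flag of its feature engineer. Roughly, it measures how unusual today’s asset returns are relative to their history as a Mahalanobis distance, d = (y − μ)ᵀ Σ⁻¹ (y − μ). FinRL’s stock trading environment can liquidate positions when turbulence crosses a threshold. The NumPy sketch below is a simplified, independent version. The names `turbulence_index` and `risk_gate`, the synthetic data, and the threshold of 50 are all illustrative assumptions.

```python
import numpy as np


def turbulence_index(history: np.ndarray, current: np.ndarray) -> float:
    """Mahalanobis distance of today's asset returns from the historical distribution.

    history: (T, N) array of past daily returns; current: (N,) returns for today.
    """
    mean = history.mean(axis=0)
    cov = np.atleast_2d(np.cov(history, rowvar=False))
    diff = current - mean
    # The pseudo-inverse tolerates a singular covariance matrix
    return float(diff @ np.linalg.pinv(cov) @ diff)


def risk_gate(action: np.ndarray, turbulence: float, threshold: float) -> np.ndarray:
    """Hypothetical rule: above the threshold, override the policy and sell everything.
    In this toy convention, -1 means 'sell at full size'."""
    if turbulence >= threshold:
        return -np.ones_like(action, dtype=float)
    return action


rng = np.random.default_rng(0)
past = rng.normal(0.0, 0.01, size=(250, 3))                        # synthetic calm history
calm = turbulence_index(past, np.zeros(3))                         # an ordinary day
shock = turbulence_index(past, np.array([-0.08, -0.07, -0.09]))    # a joint crash
policy_action = np.array([0.2, -0.1, 0.5])
gated_calm = risk_gate(policy_action, calm, threshold=50.0)
gated_shock = risk_gate(policy_action, shock, threshold=50.0)
```

Because the covariance matrix is part of the distance, a simultaneous fall across assets registers as far more turbulent than the same moves scattered over different days.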

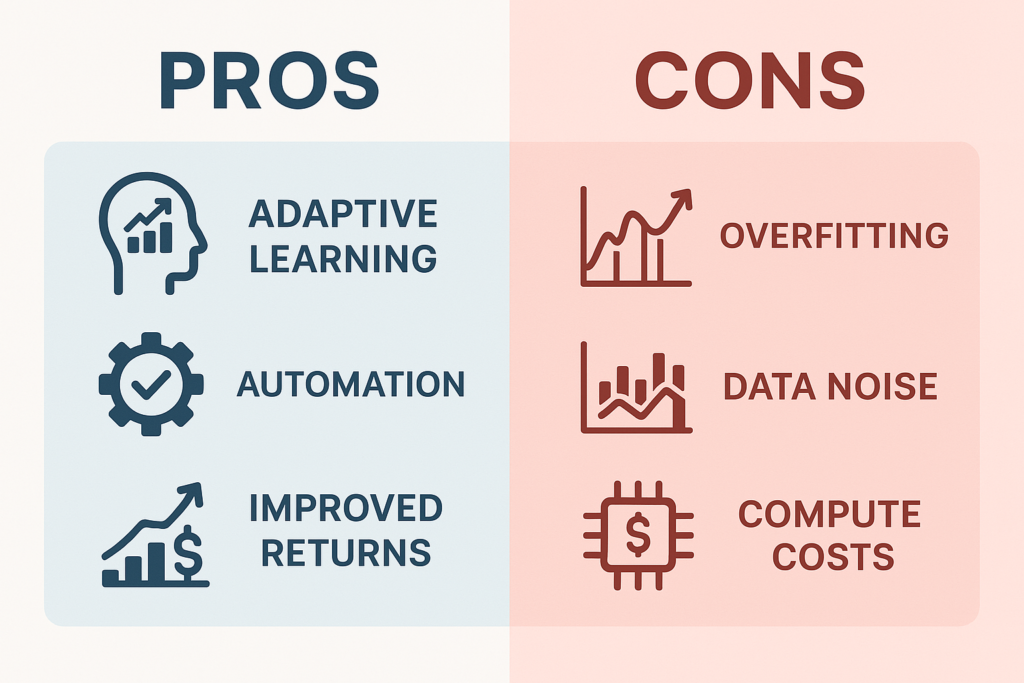

FinRL brings a range of powerful benefits to the table, making it a valuable tool for anyone working in the financial AI space:

While FinRL offers cutting-edge capabilities, it’s not without its drawbacks:

Despite these challenges, ongoing improvements in model architecture, validation techniques, and hardware acceleration are gradually addressing these limitations.

Traditional quantitative trading strategies often rely on rule-based logic, statistical analysis, or machine learning classifiers built on historical indicators. While effective to some extent, these approaches assume that past patterns will repeat—an assumption that doesn’t always hold in dynamic markets.

In contrast, FinRL uses DRL to learn through interaction, not just observation. The agent receives rewards or penalties based on actions it takes in simulated environments. This allows it to develop context-aware decision-making abilities that go beyond fixed rules or regression models.
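The toy example below illustrates learning from rewards rather than from fixed rules. It is plain tabular Q-learning in NumPy, not FinRL code, and the setup is deliberately artificial. The synthetic price series has momentum: each day’s move repeats the previous direction 90% of the time. The agent observes only whether the last return was up or down, chooses to stay flat or go long, and is rewarded with the next day’s return when long. All names and parameters here are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(42)


def momentum_returns(n: int, p_continue: float = 0.9, step: float = 0.01) -> np.ndarray:
    """Synthetic daily returns of +/-step whose sign repeats with probability p_continue."""
    signs = [1.0]
    for _ in range(n - 1):
        signs.append(signs[-1] if rng.random() < p_continue else -signs[-1])
    return step * np.array(signs)


returns = momentum_returns(5_000)

# State: 0 = last return was down, 1 = last return was up.
# Action: 0 = stay flat, 1 = go long.
q = np.zeros((2, 2))
# gamma = 0 because in this toy the action does not influence the next state
alpha, gamma, epsilon = 0.1, 0.0, 0.1

for t in range(len(returns) - 1):
    state = int(returns[t] > 0)
    # Epsilon-greedy: usually exploit the best-known action, sometimes explore
    if rng.random() < epsilon:
        action = int(rng.integers(2))
    else:
        action = int(np.argmax(q[state]))
    reward = action * returns[t + 1]  # long earns the next return, flat earns 0
    next_state = int(returns[t + 1] > 0)
    target = reward + gamma * q[next_state].max()
    q[state, action] += alpha * (target - q[state, action])  # Q-learning update

policy = q.argmax(axis=1)  # learned action for each state
```

Nothing in the code says “follow the trend”. The agent should end up flat after down days and long after up days purely because those actions earned higher rewards. FinRL applies the same principle with neural-network policies and far richer market states.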

What truly sets FinRL apart is its flexibility and extensibility. Users can:

This modular approach gives FinRL an edge over rigid legacy systems and allows developers to push the boundaries of what algorithmic trading can achieve.

As FinRL continues to evolve, several exciting trends are shaping the future of DRL in finance:

FinRL’s success is also deeply tied to its open-source community, which contributes new features, research papers, and educational resources. This collaborative effort is driving faster innovation and making advanced financial tools accessible to a global audience.

From academic research to live trading applications, the FinRL ecosystem is thriving, and its role in shaping the future of algorithmic trading is only just beginning.

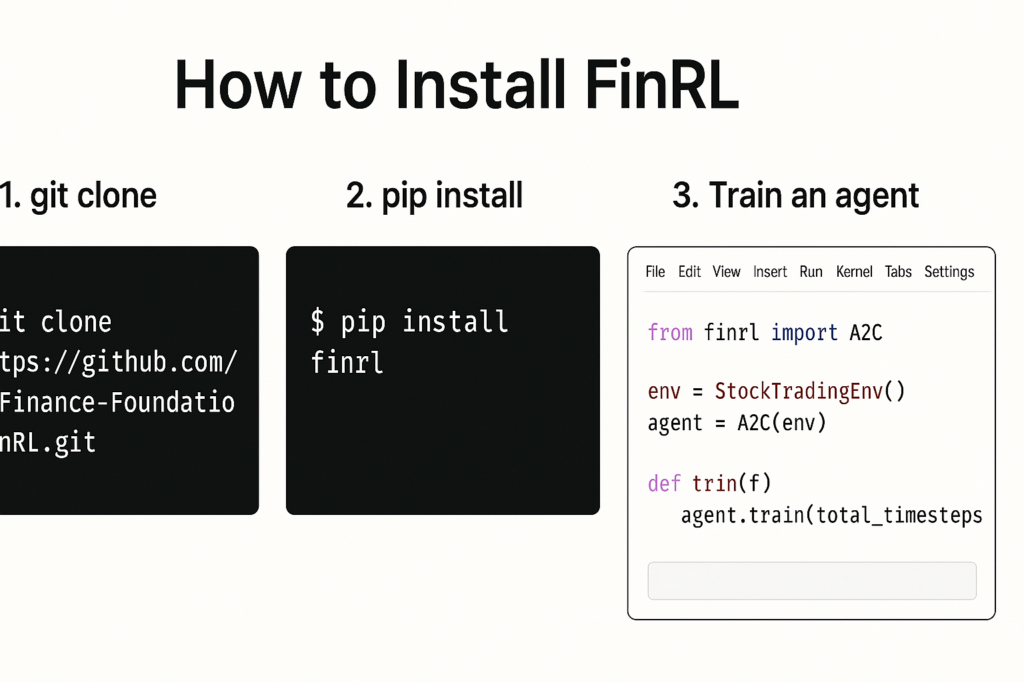

Getting started with FinRL is easier than you might think. The framework is Python-based and well-documented, making it accessible even to those with limited machine-learning experience. Here’s a quick rundown of how to set it up:

Clone the repository:

```bash
git clone https://github.com/AI4Finance-Foundation/FinRL.git
cd FinRL
```

Then use pip or conda to install the dependencies:

```bash
pip install -r requirements.txt
```

Here’s a basic example of training a DRL agent on stock data using FinRL:

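```python
# Module paths follow recent FinRL releases; older versions
# (e.g. finrl.marketdata, finrl.env) used a different layout.
from finrl.config import INDICATORS
from finrl.meta.preprocessor.yahoodownloader import YahooDownloader
from finrl.meta.preprocessor.preprocessors import FeatureEngineer, data_split
from finrl.meta.env_stock_trading.env_stocktrading import StockTradingEnv
from finrl.agents.stablebaselines3.models import DRLAgent

# Download and preprocess data
df = YahooDownloader(start_date='2020-01-01', end_date='2023-01-01', ticker_list=['AAPL']).fetch_data()
fe = FeatureEngineer(use_technical_indicator=True, tech_indicator_list=INDICATORS,
                     use_turbulence=True, user_defined_feature=False)
df = fe.preprocess_data(df)
# Re-index rows by trading day, which StockTradingEnv expects
train_df = data_split(df, '2020-01-01', '2023-01-01')

# Set up the environment (illustrative settings adapted from the FinRL tutorials)
stock_dim = len(train_df.tic.unique())
env_kwargs = {
    "hmax": 100,                          # max shares traded per step
    "initial_amount": 1_000_000,          # starting cash
    "num_stock_shares": [0] * stock_dim,  # start with no holdings
    "buy_cost_pct": [0.001] * stock_dim,  # 0.1% transaction cost per trade
    "sell_cost_pct": [0.001] * stock_dim,
    "state_space": 1 + 2 * stock_dim + len(INDICATORS) * stock_dim,
    "stock_dim": stock_dim,
    "tech_indicator_list": INDICATORS,
    "action_space": stock_dim,
    "reward_scaling": 1e-4,
}
env = StockTradingEnv(df=train_df, **env_kwargs)
env_train, _ = env.get_sb_env()  # wrap as a Stable-Baselines3 vectorized env
agent = DRLAgent(env=env_train)

# Train using PPO
model = agent.get_model("ppo")
trained_model = agent.train_model(model=model, tb_log_name="ppo_run", total_timesteps=50000)
```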

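This short pipeline trains a DRL agent with Proximal Policy Optimization (PPO) on daily Apple (AAPL) data from 2020 through 2022. Note that 50,000 timesteps is a small training budget meant for a quick experiment, and the agent has not yet been evaluated on data it did not see during training.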
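The training example uses the whole 2020 to 2022 window, which leaves no unseen period for a fair backtest. A common remedy is to split the data chronologically: train on an earlier period and trade on a later one. Recent FinRL tutorials use a `data_split` helper for this. The hypothetical `split_by_date` below is an independent pandas sketch of the same idea. It keeps rows in a half-open [start, end) date range, sorts them by date and ticker, and re-indexes them by trading day. The prices are made-up toy values.

```python
import pandas as pd


def split_by_date(df: pd.DataFrame, start: str, end: str, date_col: str = "date") -> pd.DataFrame:
    """Rows with start <= date < end, sorted by date and ticker, indexed by trading day."""
    mask = (df[date_col] >= start) & (df[date_col] < end)  # ISO date strings compare correctly
    out = df[mask].sort_values([date_col, "tic"], ignore_index=True)
    out.index = out[date_col].factorize()[0]  # 0 for the first trading day, 1 for the next, ...
    return out


# Made-up prices for two tickers on three dates (illustration only)
prices = pd.DataFrame({
    "date": ["2020-01-02", "2020-01-02", "2021-06-01", "2021-06-01", "2022-03-01", "2022-03-01"],
    "tic": ["AAPL", "MSFT"] * 3,
    "close": [10.0, 20.0, 11.0, 21.0, 12.0, 22.0],
})

train = split_by_date(prices, "2020-01-01", "2021-01-01")  # in-sample period
trade = split_by_date(prices, "2021-01-01", "2023-01-01")  # held-out period for backtesting
```

Splitting by date rather than at random matters here. A random split would let the agent train on days that come after the days it is tested on, which is a form of look-ahead bias.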

FinRL provides excellent resources to guide you through every step:

As algorithmic trading continues to evolve, FinRL has positioned itself as a pioneer in merging deep reinforcement learning with real-world financial applications. From its humble academic beginnings to powering advanced trading systems, FinRL has democratized access to financial AI tools that were once reserved for elite hedge funds and research labs.

By simplifying complex DRL workflows and making them accessible through open-source tools, FinRL is enabling a new generation of quants, developers, and financial enthusiasts to build intelligent, data-driven trading strategies. Whether you’re optimizing a portfolio, managing risk, or exploring DeFi opportunities, FinRL offers the flexibility, power, and community support to help you succeed.

As the field of financial AI advances, one thing is clear: FinRL is not just part of the trend—it’s shaping the future of how we invest, trade, and think about finance.